Advanced Prompting Techniques

Introduction

Welcome to the world of advanced prompting techniques! In the ever-evolving field of AI and product management, the way we interact with language models can significantly impact the quality of our outputs. This article delves into the nuances of structured prompting, providing you with actionable insights and techniques to elevate your prompting game. Whether you’re a seasoned professional or just starting out, these advanced strategies will help you get more out of generative AI.

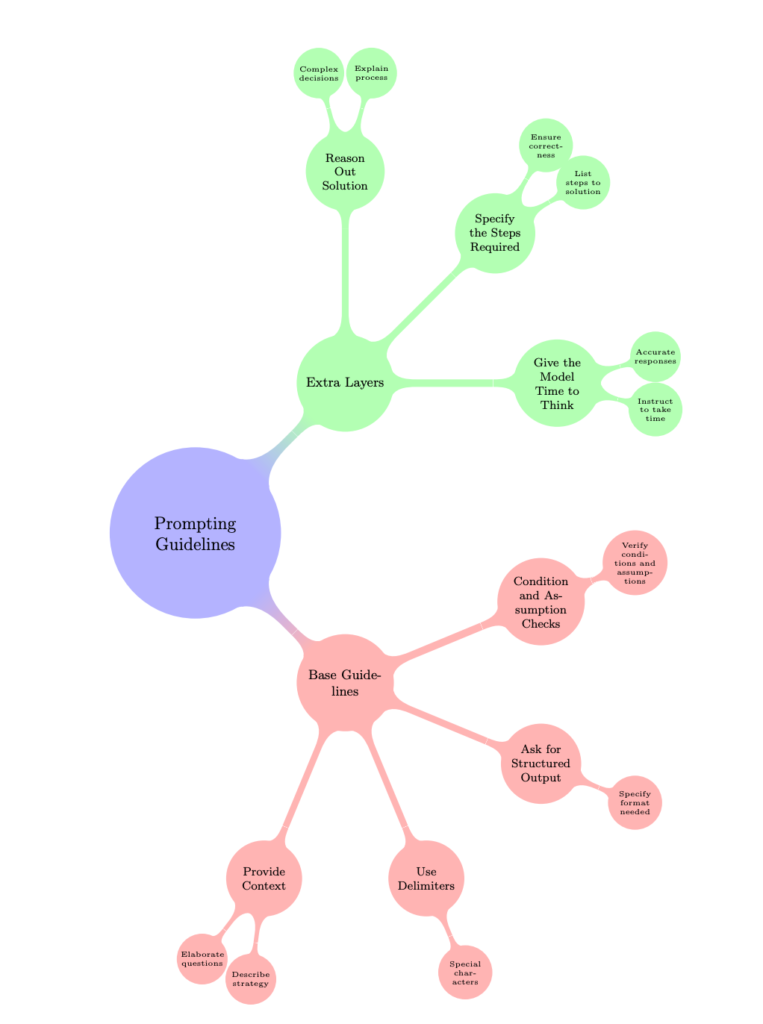

In the previous article, we looked at the following base guidelines for prompting:

- Provide Context: Ask elaborate questions and provide context. Instead of saying, “Give me a strategy document,” describe what kind of strategy you need, the scope, and any specific points you want to cover.

- Use Delimiters: These are special characters or phrases that help separate different parts of your prompt, making it easier for the model to understand where instructions begin and end.

- Ask for Structured Output: If you need information in a specific format (like a list, a summary, or a detailed report), specify this in your prompt.

- Condition and Assumption Checks: Instruct the model to verify if certain conditions are met or if specific assumptions hold before proceeding with a task. This step is crucial for tasks that require accuracy and detail.
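To make the four guidelines above concrete, here is a minimal sketch that assembles them into a single prompt string. The function name `build_structured_prompt`, the `<document>` delimiter tags, and the sample values are illustrative assumptions, not part of any library or of the previous article; the sketch only builds text and does not call a model.

```python
def build_structured_prompt(context, task, source_text, output_format, condition):
    """Combine context, delimiters, a condition check and an output format.

    All names here are hypothetical and chosen for illustration only.
    """
    lines = [
        # Provide context: say who is asking and why.
        f"Context: {context}",
        f"Task: {task}",
        # Use delimiters: mark where the material to work on begins and ends.
        "The material to work on is enclosed in <document> tags.",
        "<document>",
        source_text.strip(),
        "</document>",
        # Condition check: tell the model what to verify before it proceeds.
        f"Before you start, check this condition: {condition}",
        'If the condition is not met, reply only with "Condition not met" and stop.',
        # Ask for structured output: state the format you expect.
        f"Output format: {output_format}",
    ]
    return "\n".join(lines)


prompt = build_structured_prompt(
    context="I am a product manager preparing a go-to-market strategy document.",
    task="Summarise the key risks in the notes below.",
    source_text="(paste your meeting notes here)",
    output_format="A bulleted list with at most five items.",
    condition="The notes mention at least one risk.",
)
print(prompt)
```

Keeping each guideline on its own line makes it easy to see which part of the prompt to adjust when a response misses the mark.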

Adding a Few Extra Layers to the Structured Prompting Technique

- Give the Model Time to Think: Language models often produce quick responses that may not be entirely accurate. Instructing the model to “take your time before responding” encourages it to work through the query before committing to an answer, which often leads to more thoughtful and accurate responses. For instance, if you ask for a detailed analysis, adding this instruction can prompt the model to consider multiple aspects before generating the output.

- Specify the Steps Required to Complete a Task: Generative AI is powerful but not omniscient; it relies on its training data. When asking the model to solve a problem, such as a mathematical query, specify that it should “list the steps” used to reach the solution. This not only ensures transparency in how the answer was derived but also helps in verifying the correctness of each step. For example, a prompt like “Solve the equation and show all steps involved” yields a more reliable and understandable result than simply asking for the answer.

- Instruct the Model to Reason Out Its Solution Before Coming to a Conclusion: Encourage the model to articulate its reasoning process. This can be done by asking it to explain its thought process before providing a final answer. This technique is particularly useful for complex decision-making tasks or when the solution requires a rationale. For example, if you ask, “What is the best strategy for market entry?” you could add, “Please explain your reasoning before concluding.” This ensures a more comprehensive and reasoned response.
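The three extra layers above can be appended to any existing prompt. The sketch below is one possible way to do that, reusing the instruction wording quoted in the list; the helper name `add_reasoning_layers` and the "Before you answer:" heading are assumptions made for illustration.

```python
# Instruction wording adapted from the three layers described above.
REASONING_INSTRUCTIONS = (
    "Take your time before responding.",
    "List the steps you use to reach the solution.",
    "Explain your reasoning before stating your final answer.",
)


def add_reasoning_layers(base_prompt):
    """Append the reasoning instructions to a prompt as a numbered list."""
    numbered = [
        f"{number}. {text}"
        for number, text in enumerate(REASONING_INSTRUCTIONS, start=1)
    ]
    return base_prompt.strip() + "\n\nBefore you answer:\n" + "\n".join(numbered)


prompt = add_reasoning_layers("What is the best strategy for market entry?")
print(prompt)
```

Placing the instructions after the question keeps the original request intact, so you can compare responses with and without the added layers.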

Prompting Techniques

What we’ve discussed so far are general ideas and techniques on how to write better prompts, but the world of prompt engineering is more nuanced. There are several techniques already available, and it’s an ever-growing list.

I’ll list 10 popular prompting techniques, and we’ll go through each one in detail:

- Zero-shot prompting

- Few-shot prompting

- Tree of thought prompting

- Chain of thought prompting

- Role Goal Context prompting

- Perspective prompting

- Critique prompting

- Comparative prompting

- Generate knowledge prompting

- Multimodal chain of thought prompting

We’ll dive into each of these prompting techniques one at a time. Before we jump in, please keep in mind that not all of them will be useful or applicable to everyone.

Deep Dive:

Zero-shot Prompting

Zero-shot prompting is a technique in which the LLM is asked to perform a task without being shown any examples of how to do it. The prompt contains only the instruction (and any input it applies to), so the response relies solely on the model’s pre-existing training and knowledge.
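In code, a zero-shot prompt is simply the task itself with no worked examples attached. The sketch below illustrates that shape; the helper `zero_shot_prompt`, its `input_text` parameter, and the sentiment example are hypothetical and only build strings, without calling any model.

```python
def zero_shot_prompt(instruction, input_text=""):
    """Return a prompt containing only the task: no examples are included."""
    parts = [instruction.strip()]
    if input_text:
        # Optional input the instruction should be applied to, set off by quotes.
        parts.append(f'Input: """{input_text.strip()}"""')
    return "\n\n".join(parts)


# An instruction on its own is already a zero-shot prompt.
note_prompt = zero_shot_prompt(
    "Write a brief note thanking someone for a birthday gift."
)

# Hypothetical example with an input; still zero-shot, since no examples are shown.
sentiment_prompt = zero_shot_prompt(
    "Classify the sentiment of the input as positive, negative, or neutral.",
    "The onboarding flow was confusing.",
)

print(note_prompt)
print(sentiment_prompt)
```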

Let’s take a look at a couple of examples:

Prompt:

Write a brief note thanking someone for a birthday gift.

Response:

Thank you so much for the wonderful birthday gift! I truly appreciate your thoughtfulness and kindness. It means a lot to me, and I just wanted to express my gratitude. Looking forward to seeing you soon!

Prompt:

What’s the capital of India?

Response:

The capital of India is New Delhi.

Conclusion

As we explore the fascinating world of advanced prompting techniques, it’s important to remember that these strategies are tools to enhance the interaction with language models. By providing clear instructions, specifying steps, and encouraging thoughtful responses, you can significantly improve the quality and accuracy of the outputs. Stay tuned as we dive deeper into each prompting technique, equipping you with the knowledge to leverage AI more effectively in your professional endeavours. Happy prompting!

Next article: Few-shot prompting (coming soon)